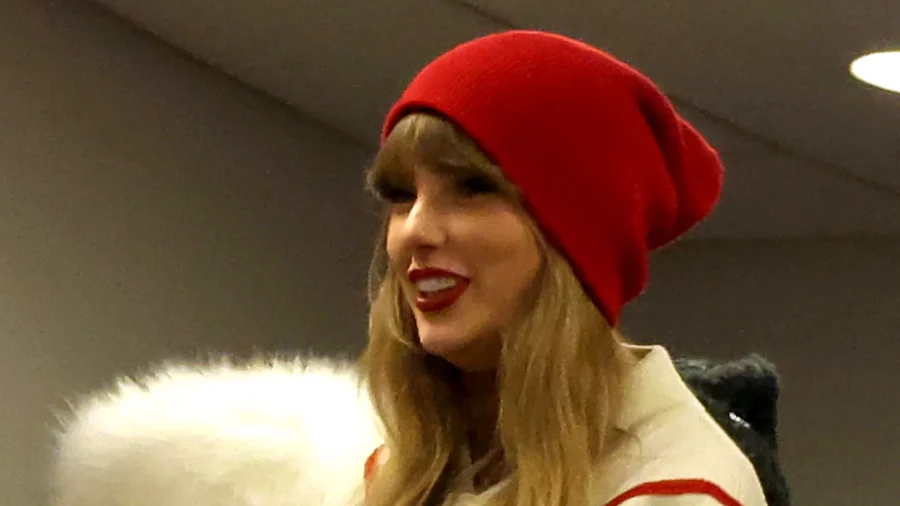

In a statement issued on Friday, the White House expressed deep concern over the proliferation of fake sexually explicit images featuring pop singer Taylor Swift on various online platforms. Press secretary Karine Jean-Pierre emphasized the vital role that social media companies play in enforcing their own rules to counter the spread of misinformation.

“This is very alarming. And so, we’re going to do what we can to deal with this issue,” Ms. Jean-Pierre said during a news briefing. She said Congress should take legislative action on the issue and noted that lax enforcement against false images, particularly those that may be generated by artificial intelligence, disproportionately affects women.

“Sadly, [it] disproportionately impacts women and girls—who are the overwhelming targets of online harassment and also abuse.”

“So while social media companies make their own independent decisions about content management, we believe they have an important role to play in enforcing their own rules to prevent the spread of misinformation and non-consensual, intimate imagery of real people,” Ms. Jean-Pierre said.

Efforts to address the issue face legal gaps. Only nine states have laws against creating or sharing non-consensual deep-fake images, and there is currently no federal law addressing the problem.

The administration has taken recent steps to combat online harassment, including launching a task force and establishing the Department of Justice’s national 24/7 helpline for survivors of image-based sexual abuse.

The circulation of explicit images of Taylor Swift has drawn attention to AI-generated imagery and its potential consequences. Danielle Citron, a professor at the University of Virginia School of Law, said the problem is widespread and affects not only celebrities but also ordinary people, including high school students and working professionals.

While the use of AI to manipulate faces and create misleading content is not new, Ms. Swift’s case has brought renewed focus to the challenges posed by AI-generated imagery. In an interview with CNN, Ms. Citron noted the importance of public attention, stating, “It’s a reckoning moment.”

The fake images of Taylor Swift, which spread primarily on the social media platform X (formerly Twitter), raised questions about content moderation practices. Social media companies, including X, have been criticized for relying on automated systems and user reports, and critics question how effective those moderation efforts are.

The ubiquity of these deceptive images has prompted calls for comprehensive legislation to address the far-reaching consequences of AI-generated content for people's personal lives and social relationships.

In a similar incident in February 2023, the gaming community faced the same problem when a high-profile male streamer on the popular platform Twitch was caught viewing deep-fake videos of his female Twitch colleagues. One of the affected streamers, known as “Sweet Anita,” told CNN the experience was “very, very surreal” and described how unsettling it is to see oneself in manipulated content.

Ms. Swift’s fans worked to get the offending posts taken down, but the incident underscores the challenges posed by AI-generated content and the need for stronger regulations and protections. As discussion of AI-generated imagery gains momentum, experts are calling for legislative changes to address the growing threat of non-consensual deep-fake imagery. These include potential revisions to Section 230 of the Communications Decency Act, which shields online platforms from liability for user-generated content.

From The Epoch Times